Expert’s Rating

Pros

- Included keyboard and pen

- Nice display

- Long battery life

Cons

- Slow performance

- Limited connectivity

- Non-backlit keyboard

Our Verdict

The Asus ExpertBook B3 has a nice display and spectacular battery life, but the slow performance really holds it back.

What can I say? I like a tiny computer. It seems like only yesterday that there were weird, cheap Windows machines galore, using small screens anywhere from 7 to 10 inches. Some of these tablets had active pens, and almost all of them were incredibly slow or had a washed-out screen or some other terrible shortcoming. But if you were willing to wait for their slow chips to chug along, they could be “good enough” in a pinch when running old apps or simple Office tasks.

That’s why the new Asus ExpertBook B3 seemed so intriguing. Not only is this a small computer with a kickstand and a keyboard cover, it’s a tiny device with a pen that neatly tucks away into a silo. It’s even based on a newer Qualcomm Snapdragon 7c Gen 2 processor, which promises power-sipping, fanless operation. Can this little tablet overcome its modest spec sheet and give users a decent Windows 11 experience? In a word, no.

Asus ExpertBook B3 Detachable: Specs and features

Our review unit came equipped with a Qualcomm Snapdragon 7c Gen 2 CPU, Adreno 618 graphics, 4GB of RAM, and 128GB of eMMC storage. Read on to learn more:

- CPU: Qualcomm Snapdragon 7c Gen 2 (8 cores)

- Memory: 4GB LPDDR4

- Graphics/GPU: Adreno 618 graphics

- Display: 10.5-inch IPS WUXGA (1920×1200) 16:10 touchscreen

- Storage: 128GB eMMC

- Webcam: 5MP front-facing/1080p for video

- Connectivity: 1x USB-C 3.2 Gen 1, 1x 3.5mm headset jack

- Networking: Wi-Fi 5, Bluetooth 5.1

- Digital Pen: MPP 2.0, charges from device

- Battery capacity: 38Wh

- Dimensions: 14.29 (W) x 9.36 (D) x 0.70 (H) inches

- Weight: 1.31 pounds (tablet-only), 2.22 pounds (tablet with keyboard and stand), 2.79 pounds with charger

- Price: $599.99

Asus ExpertBook B3 Detachable: Design and build

IDG / Brendan Nystedt

Microsoft’s Surface basically invented the modern 2-in-1, putting the keyboard cover front-and-center and balancing the whole widget on a kickstand around the back. The ExpertBook B3 is a similar design, except the kickstand isn’t built-in. Instead, it’s a piece of fabric-wrapped plastic that attaches to the tablet with magnets. This lets you stand the tablet up in vertical orientation, not just horizontally.

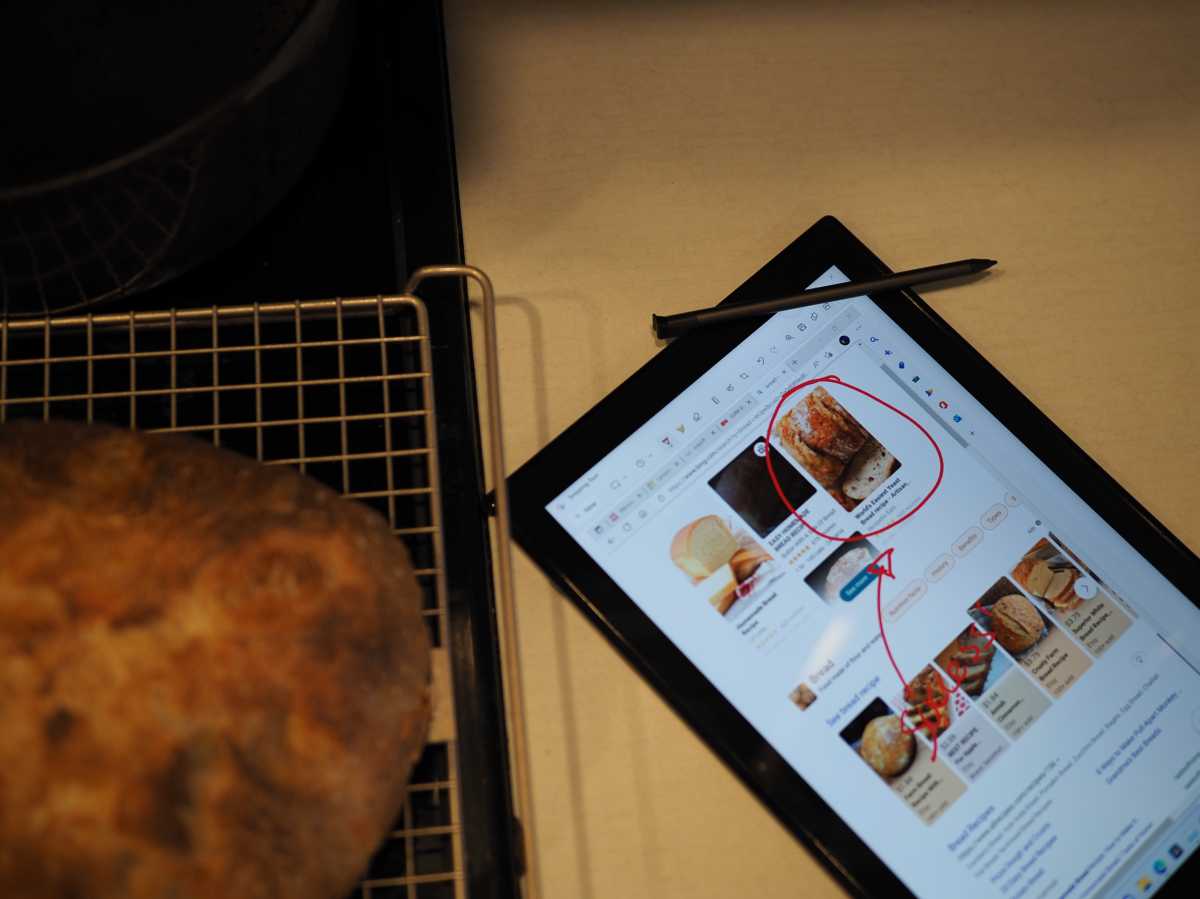

Unfortunately, I found the stand to be loosely connected except in its horizontal orientation with the keyboard attached. You definitely can’t use it as an easel for drawing with this stand, because the angle it folds to is far too shallow to get it down to the tabletop. And when you have it in vertical mode, there’s no way to connect the keyboard and the USB port is inaccessible, under the edge that’s facing the tabletop. I can see this degree of flexibility being helpful if you use your tablet in the kitchen as a cookbook, but otherwise it seems like a lot of engineering and bulk for an edge case.

At least Asus includes the kickstand attachment and keyboard cover in the box, so they’re not separate purchases. The whole package feels a bit bulky when all put together, but the tablet on its own is impressively slim.

Asus ExpertBook B3 Detachable: Connectivity

Given its small size, we can’t say we were surprised to find only two ports on this Asus tablet: a standard headset jack and a USB-C port. The USB-C port pulls double duty as your only charging port and the sole option for I/O. At least there’s a huge market out there for dongles and hubs, making it simple to turn one port into a bunch of others, including display-out.

In terms of wireless capability, the B3 has Wi-Fi 5 and Bluetooth 5.1 as standard, making it a bit behind the curve on Wi-Fi tech but perfectly usable for day-to-day tasks.

It would have been really nice to see a microSD card slot on this tablet as well, which can help make up for a lack of storage, but alas you’re stuck with the 128GB of eMMC this tablet has built-in. Because it has a US MIL-STD 810H durability rating, it seems that Asus removed as much as possible to reduce the chance of failure. All things considered, the B3’s connectivity definitely feels like a downgrade from something like a Surface Go 3, which has a magnetic dock/charging port, USB-C, a headset jack, and a microSD card slot.

Asus ExpertBook B3 Detachable: Keyboard, trackpad, and pen

IDG / Brendan Nystedt

One of the worst things about buying a Microsoft Surface is that the pen and keyboard cover are separate purchases. Here, everything comes in the box. I found, however, that the accessories that are necessary to turn this tablet into a laptop are a decidedly mixed bag. The keyboard is okay, but the keys are definitely small compared to, let’s say, a 13-inch class laptop or tablet. While the inside of the keyboard cover is plastic, the whole thing feels slightly bouncy to type on, which is less than ideal in my eyes. Then, when the lights go out, you discover (gasp!) these keys aren’t backlit! I hate to put a bunch of expectations on this little device, but when you use something called ExpertBook, you tend to expect a better-than-basic typing experience.

Below the keyboard is a dinky touchpad that I didn’t quite get along with. It’s responsive enough, but the surface feels plasticky and rough. Additionally, it’s so small on this keyboard cover that I found it was super easy to hit the edges with the palms of my hands. Finally, the floppiness of the keyboard cover made it easy to accidentally trigger the trackpad’s click. This might be an edge case for a device designed for schools and businesses, but it was something I noticed when using the device on the sofa, where it has little support under the keyboard and trackpad.

IDG / Brendan Nystedt

One of the more unique aspects of the Asus B3 is that it has an included digital pen with its own silo for storage: no magnets, no hidey hole in the keyboard cover. The pen charges when placed back into the rear of the display, which is pretty great. For notes, this pen works well enough, but I’d skip this tablet for art purposes due to some noticeable jitter in lines I drew. That and the pen’s less-than-ergonomic thin body make this a nice bonus, but far from a star attraction.

Asus ExpertBook B3 Detachable: Display, audio, and webcam

IDG / Brendan Nystedt

The one aspect of this device I have a slight gripe with is the small display and big bezels. The screen has a piano black surround, and then a big black border. Before you turn it on, you’d expect that the screen would fill the inside of the black plastic border, but instead it’s nested way inside the glass with a thick border that doesn’t look super premium in 2022.

At least the screen is great to behold. The Asus B3’s dinky 10.5-inch display has a high 1920×1200 resolution, looks vibrant from every angle, and reaches a bright and consistent 350 nits. The glossy surface might pick up glare or make it hard to see in direct sunlight, but in any other conditions I loved the way it looks. It’s also a laminated display, which can make the interaction with the screen feel more direct than one with a gap between the cover glass and the display itself underneath (digital pen users will notice the difference!). The punchy colors, good contrast, and crisp pixel density make this a device that’s at least nice to look at, even if it’s maddening to use in other regards.

The front-facing camera on the B3 is pretty good, all things considered. It’s a far cry from the 720p and 1080p units in a lot of laptops, actually letting you get 5-megapixel stills. For video calls, the higher-megapixel camera helps, but I still looked a little blotchy in anything less than bright light. The speakers and microphone are both decent. That said, the speakers are a bit quiet and might struggle in a chatty classroom or office setting.

Asus ExpertBook B3 Detachable: Performance

If there’s one frustratingly weak point for the Asus ExpertBook B3, it’s performance. This tiny tablet has a decent enough Snapdragon 7c Gen 2 processor, but the slow eMMC storage and limited RAM severely limit its abilities. This thing feels like using Windows 11 while it’s stuck in quicksand.

In use, the B3 takes forever to boot up, as if it’s emerging from a deep sleep, and Edge has to sleep browser tabs constantly to free up memory. Sitting here with OneNote and four browser tabs open, Task Manager says 3.3GB of RAM are in use...yikes! Even things you’d want to be snappy and satisfying, like pressing the Start button on the touchscreen, lag.

If you didn’t know much about computers, you’d suspect something was wrong. It’s made worse by the lackluster ability of Windows 11 Pro to emulate older apps. Even though it should cut the mustard when it comes to lightweight mobile-friendly software, I found the B3 rendered an indie game like Donut County unplayable under emulation. But don’t take my word for it. Let’s take a look at some benchmarks.

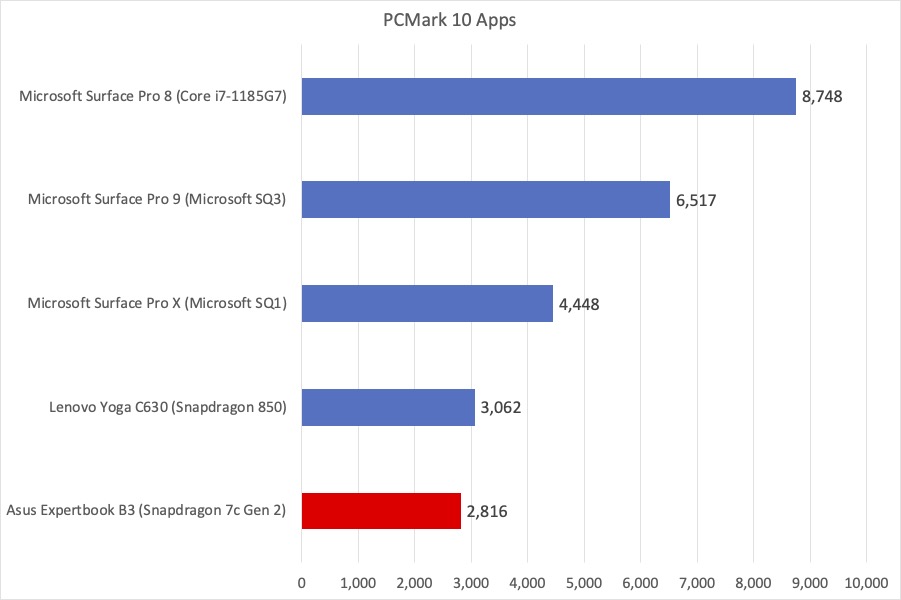

In the PCMark 10 Apps test, which runs natively on Snapdragon devices like the Asus B3, we saw a score that was less than impressive. In fact, it fell short of the standard set by a Lenovo Snapdragon-based device that’s almost four years old. For the sake of comparison, we’ve included a Surface Pro from last year running a premium Intel chip just to demonstrate how slow this tablet is. This test uses Microsoft’s own apps to run different tasks in order to measure the responsiveness of the system in Office and Edge scenarios.

IDG / Brendan Nystedt

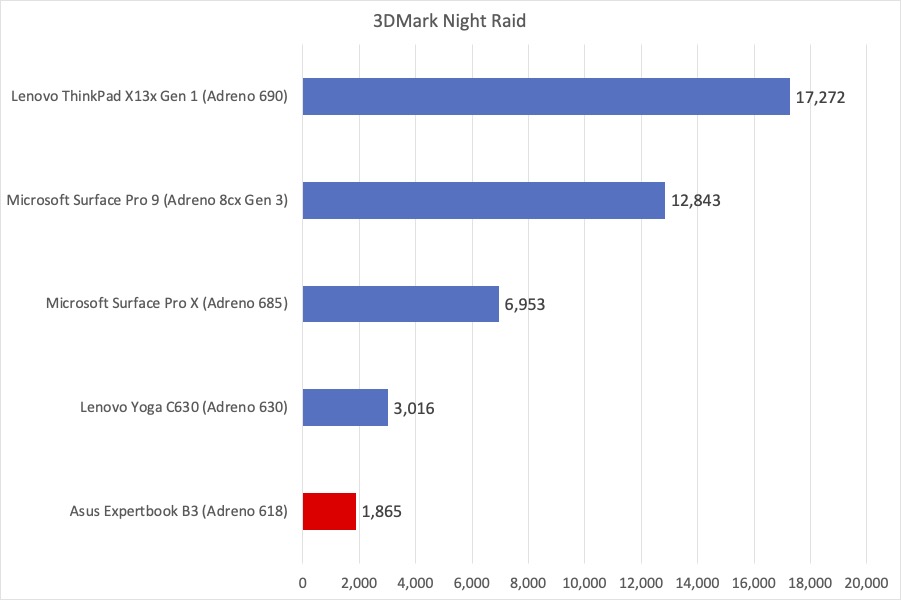

The Asus ExpertBook B3 turned in a disappointing showing when its graphics were put to the test in the ARM-friendly Night Raid benchmark. This poor performance makes a certain amount of sense, however, since we’re dealing with a cheaper Snapdragon chip with a worse GPU. The rest of the bunch we had tested all had that year’s best graphics, which this decidedly doesn’t.

IDG / Brendan Nystedt

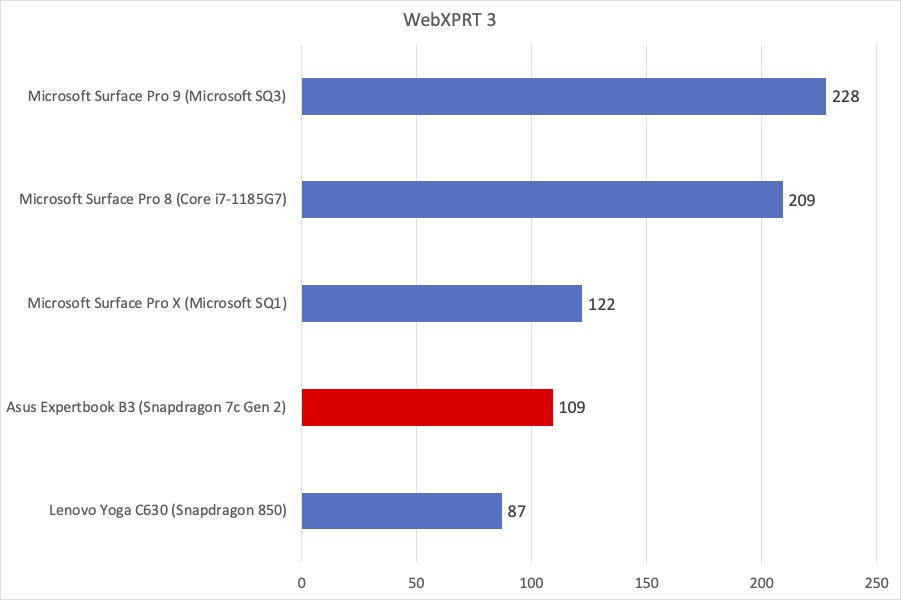

Since web browsing is an important part of today’s computing experience, we ran the WebXPRT 3 benchmark to get a sense for how this system performs with a variety of tasks in the Edge browser. Although the B3 outperformed the older Lenovo Yoga C630 in this test, it’s still a poor performer on the whole.

IDG / Brendan Nystedt

Asus ExpertBook B3 Detachable: Battery life

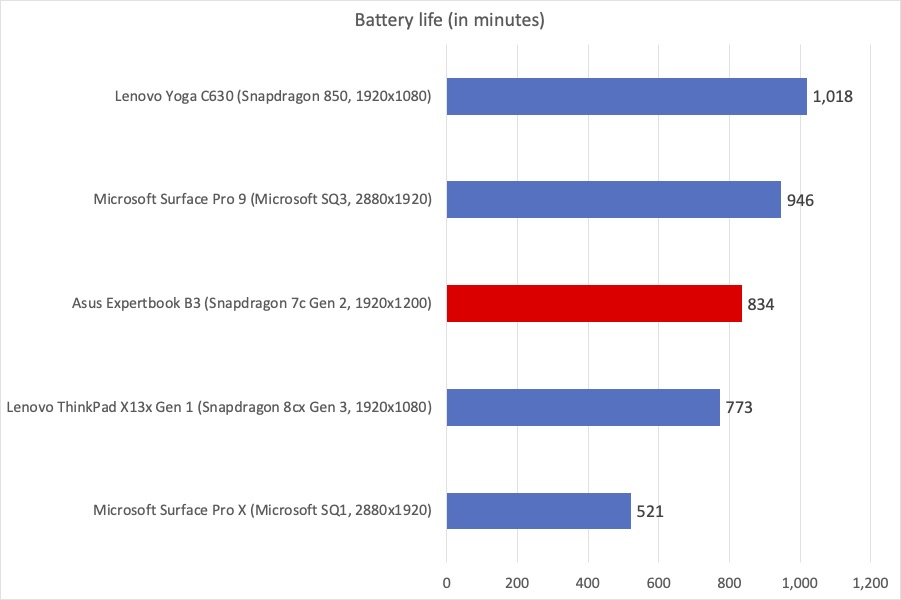

One bright spot in the performance tests was the long-lasting battery. In our looping 4K video test, the Asus ExpertBook B3 nearly made it to 14 hours of runtime, which is stellar for a tiny Windows tablet like this. Anecdotally, while writing this review on the Asus, I saw the battery drain around 5% for an hour of light work.

IDG / Brendan Nystedt

Should you buy the Asus ExpertBook B3 Detachable?

At the end of my time with the Asus ExpertBook B3, I was left with one question: who is this for? The marketing makes it seem like it’s for frontline workers or the education market, but I’d feel bad handing this off to an employee and even worse for the kids stuck with this in a computer lab. It’s also available at Best Buy for anyone to purchase, which I’d advise against unless it’s deeply discounted. Even without thinking of competing options, there’s not a great case for its $600 price.

Even with stellar battery life, included accessories, and a nice screen, the Asus B3 reminds us that Windows on a Snapdragon chip can still have a lot of drawbacks, especially in a cheaper configuration like this. Sure, the overall experience has improved a lot on the high-end with the Surface Pro 9 and ThinkPad X13s Gen 1, but for now you may want to avoid these low-end Snapdragon Windows PCs.